15 May 2023

A team of researchers has developed a method that applies technology commonly seen in virtual reality to the remote, real-time operation of unmanned vehicles, making remote human-vehicle interaction more natural.

The group, from Xi’an Jiaotong-Liverpool University, King’s College London and the University of British Columbia, won the Best Paper Award at the 18th International Conference on Virtual-Reality Continuum and its Applications in Industry.

Remote human-vehicle interaction is a field of robotics dealing with how people interact with and control various machines, including unmanned aerial vehicles (UAVs), such as drones, and their land-based counterparts, unmanned ground vehicles (UGVs).

One of the most common uses of UGVs is search and rescue. A recently emerged approach lets a rescuer investigate a site conveniently by driving a UGV while watching the real-time picture transmitted by a UAV.

This is crucial when the terrain makes it difficult to transport personnel and equipment. In such cases, a UGV working with a UAV combines aerial and ground perspectives and can carry out large-scale searches with no blind spots, making the pair highly efficient.

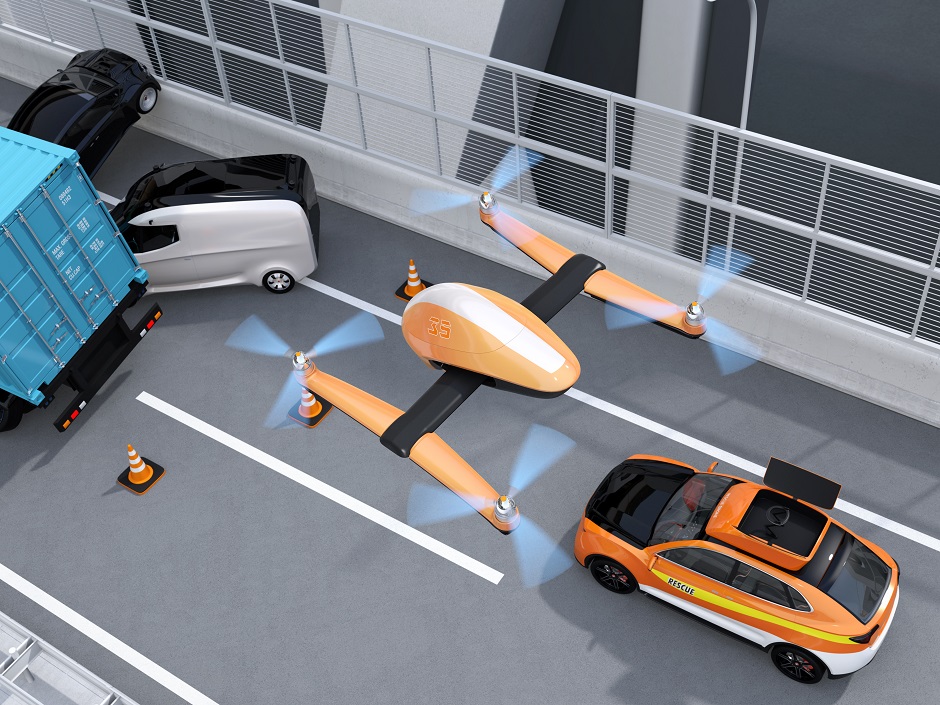

A rendering of a UGV (right) and a UAV (centre) working in tandem at a rescue site

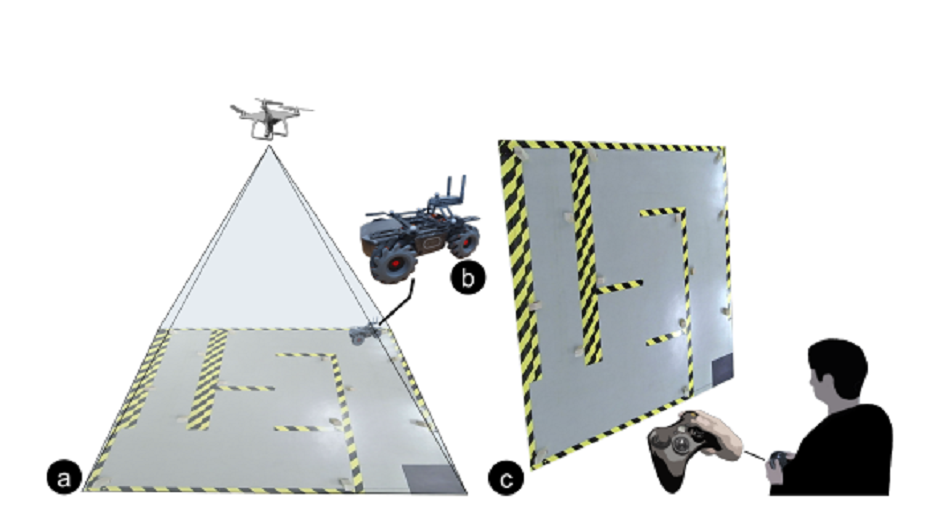

However, the traditional method of operating UGVs, using two joysticks, has a limitation: the UAV’s camera angle is fixed, so the bird’s-eye view of the map does not change when the UGV changes direction. This can make operation difficult. For example, when the UGV moves from the top of the map towards the bottom, its left and right are reversed relative to the screen, and some operators find it hard to tell which way to steer.

The traditional method of using joysticks to control a UGV. Users sometimes have trouble distinguishing left from right when the vehicle moves from the top of the map downwards.

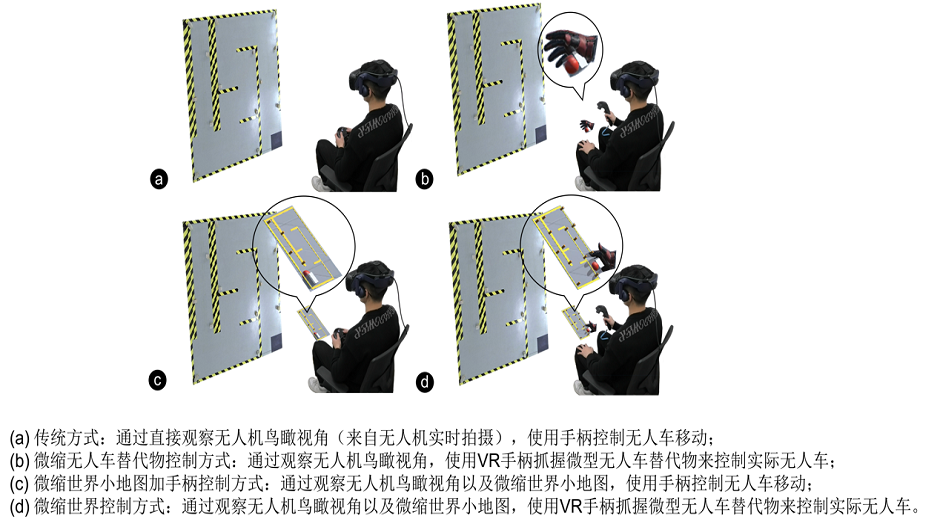

To solve this problem, the research group applied a virtual reality technology known as world in miniature (WiM). This gives the user a first-person perspective by showing a smaller version of the layout, which moves with the vehicle.

With VR devices, operators can control the UGV more effectively, moving it to a designated location with hand gestures such as grasping and moving.

(a) Traditional method: Control the UGV with joysticks by observing a real-time bird’s eye view transmitted by the UAV.

(b) Control the UGV with a VR controller by observing a real-time bird’s eye view transmitted by the UAV.

(c) Control the UGV with joysticks by observing the bird’s eye view and the miniature map at the same time.

(d) Control the UGV with a VR controller by observing the bird’s eye view and the miniature map at the same time.
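The following Python sketch illustrates, under simplified assumptions, why a fixed overhead view can swap left and right and how a miniature map aligned with the vehicle’s heading avoids this. It is not the research team’s implementation: the 2-D convention (screen x to the right, y up, heading measured anticlockwise from screen “up”) and the function names `vehicle_axes`, `to_miniature` and `screen_direction` are hypothetical.

```python
import math

# Hypothetical 2-D convention (not from the paper): screen x points right,
# y points up, and heading is measured anticlockwise from screen "up", in degrees.

def vehicle_axes(heading_deg: float) -> tuple[tuple[float, float], tuple[float, float]]:
    """Return the vehicle's forward and left unit vectors in fixed map coordinates."""
    t = math.radians(heading_deg)
    forward = (-math.sin(t), math.cos(t))
    left = (-math.cos(t), -math.sin(t))  # forward rotated 90 degrees anticlockwise
    return forward, left

def to_miniature(vec: tuple[float, float], heading_deg: float) -> tuple[float, float]:
    """Rotate a map-frame vector by -heading so the vehicle's forward points up,
    as a heading-aligned miniature map would display it."""
    t = math.radians(heading_deg)
    x, y = vec
    return (x * math.cos(t) + y * math.sin(t), -x * math.sin(t) + y * math.cos(t))

def screen_direction(vec: tuple[float, float]) -> str:
    """Name the dominant on-screen direction of a vector."""
    x, y = vec
    if abs(x) >= abs(y):
        return "right" if x > 0 else "left"
    return "up" if y > 0 else "down"

if __name__ == "__main__":
    for heading in (0, 180):  # driving towards the top, then towards the bottom of the map
        _, left = vehicle_axes(heading)
        print(heading,
              "fixed view: left appears", screen_direction(left),
              "| miniature: left appears", screen_direction(to_miniature(left, heading)))
```

At a heading of 180 degrees (driving towards the bottom of the map), the fixed view places the vehicle’s left on the right-hand side of the screen, while the heading-aligned miniature still shows it on the left. A real WiM interface would also translate and scale the miniature around the vehicle’s position; only the rotation is modelled here.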

According to Yiming Luo, the paper’s first author and a PhD student at the School of Advanced Technology, human-machine collaboration has attracted wide attention worldwide. The team’s research focused on developing a user-friendly interface that enables more convenient and accurate remote control of UGVs, so that they can better serve people.

“For a long time, one of the biggest pain points in the remote control of robots was signal transmission, which caused problems such as signal delay. As communication technology matures, these problems are being solved.

“However, the design of the remote-control interface is still in its infancy. As communications and hardware have developed, it has gradually become a focus area that needs to be improved. A better experience and more efficient human-machine interaction are the focus of our research,” he explains.

Jialin Wang, the paper’s second author and also a PhD student at the School of Advanced Technology, says: “There were many variables when we tried to realise our idea, and we had to analyse, investigate and try each possibility, which allowed us to accumulate a lot of practical experience.

“We really hope this technology will have more applications in the future and contribute to fields like search and rescue, agricultural measurement, TV and filmmaking, and more,” Wang says.

Luo and Wang are co-supervised by Professor Hai-ning Liang and Dr Yushan Pan, both at XJTLU’s Department of Computing.

Dr Pan says: “Our team’s interface allows users to control UGVs more flexibly and accurately in the top-view perspective, and the research paper also provides a unique insight for researchers worldwide in areas such as natural human-machine collaboration and remote control.”

Professor Liang says: “With their cross-disciplinary research using robots, remote control and VR, the students proved that remote control of UGVs can be achieved even without computer monitors, keyboards, mice or joysticks. The introduction of WiM makes the remote interaction between humans and UGVs more natural.

“This research is a meaningful contribution to the remote operation of UGVs and the driverless car industry,” he concludes.

By Huatian Jin

Translated by Xiangyin Han

Edited by Patricia Pieterse

